Information on Kinect

Research.Kinect History


- RGBDemo, software to calibrate and visualize the output of a Kinect


(:redirect [[Research.KinectCalibration]]:)


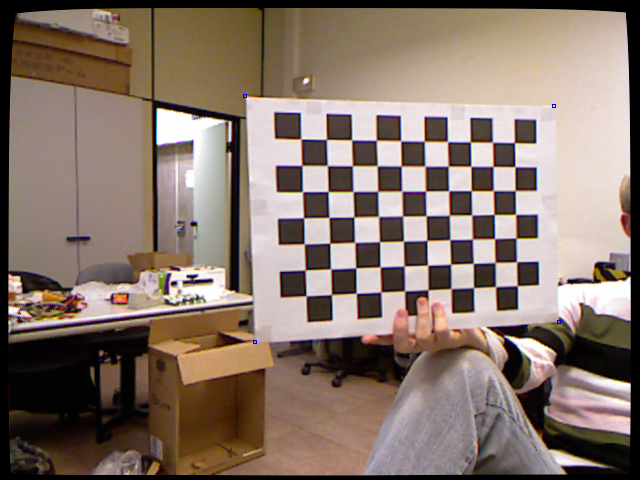

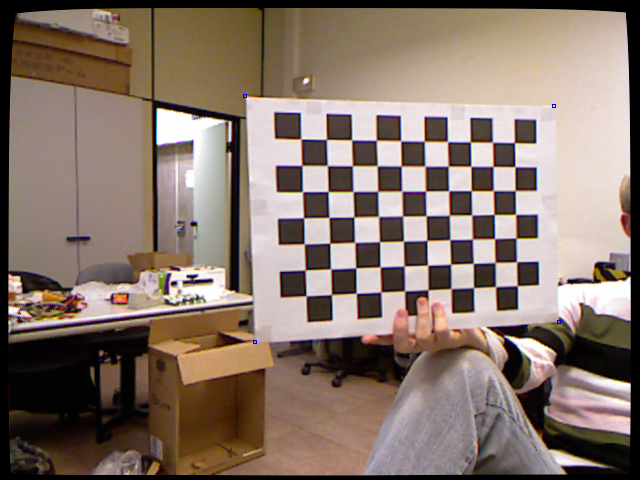

Transformation of raw depth values into meters

Raw depth values are integers between 0 and 2047. They can be converted into depth in meters using the parameters given on the ROS Kinect page:

float raw_depth_to_meters(int raw_depth)
{
  /* Raw values below 2047 are converted; anything else yields 0. */
  if (raw_depth < 2047)
  {
    return 1.0 / (raw_depth * -0.0030711016 + 3.3309495161);
  }
  return 0;
}

Note that another transformation, claimed to be more accurate, was proposed in [http://groups.google.com/group/openkinect/browse_thread/thread/31351846fd33c78/e98a94ac605b9f21?lnk=gst&q=stephane#e98a94ac605b9f21 Stephane Magnenat's post], but I haven't tested it yet.

Stereo calibration

By also selecting the corners of the chessboard on the color images (they would also be easy to extract automatically using chessboard recognition), standard stereo calibration can be performed.

Mapping depth pixels with color pixels

The first step is to undistort the RGB and depth images using the estimated distortion coefficients. Then, using the depth camera intrinsics, each pixel (x_d,y_d) of the depth camera can be projected to metric 3D space using the following formula:

P3D.x = (x_d - cx_d) * depth(x_d,y_d) / fx_d

P3D.y = (y_d - cy_d) * depth(x_d,y_d) / fy_d

P3D.z = depth(x_d,y_d)

with fx_d, fy_d, cx_d and cy_d the intrinsics of the depth camera.

We can then reproject each 3D point on the color image and get its color:


P3D' = R.P3D + T

P2D_rgb.x = (P3D'.x * fx_rgb / P3D'.z) + cx_rgb

P2D_rgb.y = (P3D'.y * fy_rgb / P3D'.z) + cy_rgb


with R and T the rotation and translation parameters estimated during the stereo calibration.
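The two formulas above (projection of a depth pixel to 3D, then reprojection onto the color image) can be sketched in C as follows. This is only an illustration under stated assumptions: the struct names, the function name and its return convention are hypothetical, the images are assumed to be already undistorted, and no calibration values are included since they depend on each device.

```c
#include <assert.h>

/* Hypothetical container for pinhole intrinsics, in pixels. */
typedef struct { double fx, fy, cx, cy; } Intrinsics;

/* Hypothetical container for the stereo calibration result:
   rotation R and translation T from depth to color camera. */
typedef struct { double R[3][3]; double T[3]; } Extrinsics;

/* Maps depth pixel (x_d, y_d) with depth in meters to color image
   coordinates. Returns 1 on success, 0 when the depth is not positive
   or the transformed point is not in front of the color camera. */
int depth_pixel_to_color_pixel(const Intrinsics *d, const Intrinsics *rgb,
                               const Extrinsics *e,
                               double x_d, double y_d, double depth_m,
                               double *x_rgb, double *y_rgb)
{
    double p[3], q[3];
    int i;

    assert(d && rgb && e && x_rgb && y_rgb);
    if (depth_m <= 0.0)
        return 0;

    /* P3D: back-project the depth pixel to metric 3D space. */
    p[0] = (x_d - d->cx) * depth_m / d->fx;
    p[1] = (y_d - d->cy) * depth_m / d->fy;
    p[2] = depth_m;

    /* P3D' = R.P3D + T: move the point into the color camera frame. */
    for (i = 0; i < 3; ++i)
        q[i] = e->R[i][0] * p[0] + e->R[i][1] * p[1]
             + e->R[i][2] * p[2] + e->T[i];
    if (q[2] <= 0.0)
        return 0;

    /* P2D_rgb: project onto the color image plane. */
    *x_rgb = q[0] * rgb->fx / q[2] + rgb->cx;
    *y_rgb = q[1] * rgb->fy / q[2] + rgb->cy;
    return 1;
}
```

The resulting coordinates are generally not integers, so a caller would typically round them and check that they fall inside the color image before reading a color.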

The parameters I could estimate for my Kinect are:

- Color


Also check this webpage for more information on automatic calibration: [http://www.ros.org/wiki/kinect_node|Ros Kinect]




ROS calibration files


Here are some calibration files in ROS (http://code.ros.org) format. Attach:calibration_rgb.yaml Attach:calibration_depth.yaml

Here is a preliminary semi-automatic way to calibrate the Kinect depth sensor and the RGB output to enable a mapping between them. You can see a result here: (:youtube BVxKvZKKpds:)
